Write a numbered list of the three most interesting things that you observed while peforming your experiments in the w7a check your understanding section.

Each of your points should only be one or two sentences. While we hope that you found out more than three things, please do not list more than three.

Please compare your answers in your tutorial. In particular, discuss anything that you found surprising or confusing.

Learning a transformation:

The \(K\)-nearest-neighbour (KNN) classifier predicts the label of a feature vector by finding the \(K\) nearest feature vectors in the training set. The label predicted is the label shared by the majority of the training neighbours. For binary classification we normally choose \(K\) to be an odd number so ties aren’t possible.

KNN is an example of a non-parametric method: no fixed-size vector of parameters (like \(\bw\) in logistic regression) summarizes the training data. Instead, the complexity of the function represented by the classifier grows with the number of training examples \(N\). Often, the whole training set needs to be stored so that it can be consulted at test time. (Gaussian processes are also a non-parametric method.)

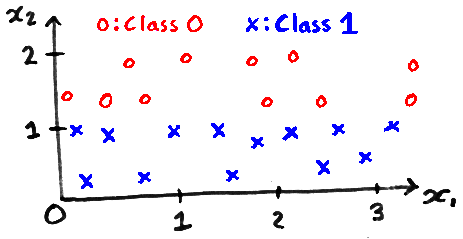

How would the predictions from regularized linear logistic regression, with \(P(y\te1\g\bx,\bw,b) = \sigma(\bw^\top\bx+b)\), and 1-nearest neighbours compare on the dataset below?

Answer:

Regularized linear regression would fit a decision boundary roughly along the line \(x_2=1.1\). The confidence of its predictions depend on the size of regularization used. KNN does not give a straight decision boundary. In particular it will classify points roughly around \((1.2,1.3)\) and \((3,1.3)\) as class 1, although those locations appear to be in the general band of class 0 points. The KNN classifications don’t come with a measure of uncertainty.

Non-parametric methods can have parameters. We could modify the KNN classifier by taking a linear transformation of the data \(\bz = A\bx\), and finding the \(K\) nearest neighbours using the new \(\bz\) features. We’d like to learn the matrix \(A\), but for \(K\te1\), the training error is 0 for almost all \(A\) (the nearest neighbour of a training point is itself, which has the correct label by definition).

A loss function that could evaluate possible transformations \(A\) is the leave-one-out (LOO) classification error, defined as the fraction of errors made on the training set when the \(K\) nearest neighbours for a training item don’t include the point being classified itself.

The identity transformation, which does nothing, is: \[A = \begin{bmatrix} 1 & 0\\ 0 & 1\\ \end{bmatrix}. \] Write down a matrix \(A\) where the 1-nearest neighbour classifier has lower LOO error than using the identity matrix for the data above. Explain why your matrix works in about three sentences.

Explain whether we can fit the LOO error for a KNN classifier by gradient descent on the matrix \(A\).

Answer:

An infinitesimal perturbation of almost all matrices \(A\) won’t change which points are the \(K\) nearest neighbours of any other points. Therefore all of the classification decisions will be the same, and the derivatives of the LOO score with respect to \(A\) are usually all zero. A gradient-based optimizer has no signal with which to adjust the transformation and will do nothing.

In edge cases, two neighbours could be nearly the same distance away, and a small change in \(A\) could change which of them is the nearest neighbour. The LOO score may change by a finite amount for an infinitesimal change, making the gradient undefined or infinite. So no, we can’t fit \(A\) with gradient methods.

For keen students: If we can create a loss function that’s a bit like the LOO error but differentiable, then we can fit \(A\) to that loss. For interest, that has been done: https://papers.nips.cc/paper/2566-neighbourhood-components-analysis

Assume that we have implemented some other classification method where we can evaluate a cost function \(c\) and its derivatives with respect to feature input locations: \(\bar{Z}\), where \(\bar{Z}_{nk} = \pdd{c}{Z_{nk}}\) and \(Z\) is an \(N\ttimes H\) matrix of inputs.

We will use that code by creating the feature input locations from a linear transformation of some original features \(Z = XA^\top\). How could we fit the matrix \(A\)? If \(A\) is an \(H\ttimes D\) matrix, with \(H\!<\!D\), how will the computational cost of this method scale with \(D\)?

You may quote results given in lecture note w7d.

Answer:

Adapting the rule for matrix products given in the lecture notes, we can backpropagate a derivative through a matrix product \(Z=XA^\top\) with \(\bar{A} = \bar{Z}^\top X\). We now have the derivatives of the cost function with respect to the transformation matrix \(A\). We can use these derivatives in a gradient-based optimizer to fit \(A\).

There will probably be local optima, so we may wish to randomly initialize \(A\) multiple times to find a good local optimum.

We are taking \(D\)-dimensional features, reducing them to \(H\)-dimensions and then running the classifier. The only stage whose cost depends on \(D\) is the dimension-reducing step, as then the original data are discarded. Each of \(Z=XA^\top\) and \(\bar{A} = \bar{Z}^\top X\) cost \(O(NDH)\). So any method with a classifier following a linear dimension reducing step should scale linearly with the original input dimensionality.

For keen students: Equation (5) in the NCA paper mentioned in the previous part costs \(O(D^2)\). Really keen students would work out how to fix the computation described in the paper to scale as \(O(D)\).

Intended lessons of Q2:

Anyone doing machine learning should know what KNN is. It can be a hard method to beat if you have a reasonable way to measure distance. An exam question could describe a method like KNN and ask you to compare it to methods that we have spent time on in the class: for example you might be expected to comment on complexity, storage requirements, and a comparison of the functions that the methods can represent.

This exercise also gives practice in core parts of the course: thinking about linear transformations, and what they can do to data, and applying derivative rules that have been given to you: demonstrating you understand the high-level picture of reverse-mode differentiation and its consequences.

Finally, there is a big picture lesson for fitting models. If you want to learn one or two parameters, you can cross-validate choices made at random or on a grid. However, it’s hard to explore the space of many parameters (like a whole \(A\) transformation matrix) that way, and gradient methods are often a good alternative. However, (standard) gradient-based optimizers need differentiable functions, and not all cost functions are differentiable.

For keen students only (non-examinable): the answers reference a research paper that has a clever trick for making a differentiable cost function. For comparison, taking a hard step function in a neural network and making it a differentiable sigmoid function means we can fit neural networks with gradient methods. Research on architectures like “neural Turing machines” and “memory networks”, and work surrounding the “concrete distribution”, are also driven by finding differentiable cost functions for interesting tasks.

Linear autoencoder

We centre our data so it has zero mean, and fit a linear autoencoder with no bias parameters. The autoencoder is a \(D\)-dimensional vector-valued function \(\bff\), computed from \(D\)-dimensional inputs \(\bx\), using an intermediate \(K\)-dimensional “hidden” vector \(\bh\): \[\begin{align} \notag \bh &= W^{(1)}\bx\\ \notag \bff &= W^{(2)}\bh. \end{align}\] Assume we want to find a setting of the parameters that minimizes the square error \(\|\bff-\bx\|^2 = (\bff - \bx)^\top(\bff-\bx)\), averaged (or summed) over training examples.

What are the sizes of the weight matrices \(W^{(1)}\) and \(W^{(2)}\)? Why is it usually not possible to get zero error for \(K\!<\!D\)?

Answer:

\(W^{(1)}\) is \(K\ttimes D\)

\(W^{(2)}\) is \(D\ttimes K\)\(\bff = (W^{(2)}W^{(1)})\bx\).

The geometry of this transformation was discussed in the answer to the maths background self-test. The final multiplication by \(W^{(2)}\) brings points in \(K\)-dimensions up into \(D\)-dimensions, but the \(\{\bff^{(n)}\}\) points will still all be in a \(K\)-dimensional linear subspace. Unless the \(\{\bx^{(n)}\}\) points happen to lie exactly in a \(K\)-dimensional linear subspace, they can’t be fitted exactly.

An alternative explanation is that to get zero error, we need \(W^{(2)}W^{(1)} = \I\). However, this matrix product has rank at most \(K\), and \(\I\) has rank \(D\).

It’s common to transform a batch (or “mini-batch”) of data at one time. Given an \(N\ttimes D\) matrix of inputs \(X\), we set: \[\begin{align} \notag H &= XW^{(1)\top}\\ \notag F &= HW^{(2)\top} \end{align}\] The total square error \(E = \sum_{nd} (F_{nd} - X_{nd})^2\), has derivatives with respect to the neural network output \[\notag \pdd{E}{F_{nd}} = 2(F_{nd} - X_{nd}), \quad \text{which we write as}~ \bar{F} = 2(F - X). \] Using the backpropagation rule for matrix multiplication, \[\notag C = AB^\top \quad\Rightarrow\quad \bar{A} = \bar{C}B ~~\text{and}~~ \bar{B} = \bar{C}^\top A, \] write down how to compute derivatives of the cost with respect to \(W^{(1)}\) and \(W^{(2)}\). If time: you should be able to check numerically whether you are right.

Answer:

Applying the rule given in the question: \[\begin{align} E &= \textstyle\sum_{nd} (F_{nd} - X_{nd})^2 & \bar{F} &= 2(F-X)\\ F &= HW^{(2)\top} & \bar{H} &= \bar{F}W^{(2)} ~\text{and}~ \bar{W}^{(2)} = \bar{F}^\top H \\ H &= XW^{(1)\top} & \bar{W}^{(1)} &= \bar{H}^\top X \end{align}\] The forwards computation goes from the bottom of the left column up to the top. Backpropagation then computes the second column from top to bottom. A demonstration of checking these expressions is available online.

The PCA solution sets \(W^{(1)}\te V^\top\) and \(W^{(2)}\te V\), where the columns of \(V\) contain eigenvectors of the covariance of the inputs. We only really need to fit one matrix to minimize square error.

Tying the weight matrices together: \(W^{(1)}\te U^\top\) and \(W^{(2)}\te U\), we can fit one matrix \(U\) by giving its gradients \(\bar{U} = \bar{W}^{(1)\top} + \bar{W}^{(2)}\) to a gradient-based optimizer. Will we fit the same \(V\) matrix as PCA?

Answer:

This question is mainly here just to show you an example of “parameter tying”. Sharing parameters in different parts of a model is common in machine learning. For example recent large word-based language models all use parameter tying, following https://arxiv.org/abs/1608.05859. It’s often easier to think about the parameters being separate at first, and then their derivatives just get added up.

The optimizer won’t set \(U\te V\). The cost function only cares about how well the output matches the input, so the globally optimum cost can be obtained by setting \(U \te VQ\), for any orthogonal matrix \(Q\). Then the function that is fitted is: \(\bff = (V Q Q^\top V^\top )\bx = (V V^\top)\bx\), which matches what a PCA reconstruction would do, and so has the same cost.

Any other reasonable argument is fine. For example, explaining that the hidden units can be permuted, and so there are multiple solutions.

In practice, gradient-based optimizers do converge to a solution with the minimum cost, However, we don’t think it’s totally straightforward to prove that they do. (We would welcome a neat proof or reference to add here.) The cost function is not convex in \(V\): an average of \(V\) and \(VQ\) does not give an optimal autoencoder.

Non-linear autoencoders

Some datapoints lie along the one-dimensional circumference of a semi-circle. You could create such a dataset, by drawing one of the features from a uniform distribution between \(-1\) and \(+1\), and setting the other feature based on that: \[\begin{align} \notag x_1\nth &\sim \text{Uniform}[-1,1]\\ \notag x_2\nth &= \sqrt{1- \big(x_1\nth{\big)}^2}. \end{align}\]

Explain why these points can’t be perfectly reconstructed when passed through the linear autoencoder in Q3 with \(K\te1\).

Answer:

The \(\{\bff^{(n)}\}\) points must all lie in a \(K\)-dimensional linear subspace. With \(K\te1\), the outputs of the autoencoder all lie along a straight line in the 2-dimensional data space. The original data are on a line, but not a straight line, and so can’t be matched perfectly.

Explain whether the points could be perfectly reconstructed with \(K\te1\) by some non-linear decoder: \(\bff = \bg(h)\). Where \(\bg\) could be an arbitrary function, perhaps represented by multiple neural network layers. Assume the encoder is still linear: \(h = W^{(1)}\bx\).

Answer:

We could set the weights so that \(h\te x_1\). Then set: \[\begin{align} g_1(h) &= h = x_1\\ g_2(h) &= \sqrt{1 - h^2} = x_2, \end{align}\] thus perfectly reconstructing any input.

Explain whether the points could be perfectly reconstructed with \(K\te1\) by some non-linear encoder: \(h = g(\bx)\). Where \(g\) could again be an arbitrary function, perhaps represented by multiple neural network layers. Assume the decoder is still linear: \(\bff = W^{(2)}h\).

Answer:

No matter how we set the non-linearity \(g\) here, the outputs lie along a straight line, and so cannot match the original data.