Computing logistic regression predictions using sampling based methods

In the previous note we approximated the logistic regression posterior with a Gaussian distribution. By comparing to the joint probability, we immediately obtained an approximation for the marginal likelihood \(P(\D)\) or \(P(\D\g\M)\), which can be used to choose between alternative model settings \(\M\). We could also use the Laplace approximation to make Bayesian predictions. We now look at other ways to make Bayesian predictions that do not involve a Gaussian approximation.

1 Monte Carlo approximations

A route to avoiding Gaussian approximations is to approximate the prediction, which is an expectation, with an empirical average over samples: \[\begin{align} P(y\te1\g \bx, \D) &= \int \sigma(\bw^\top\bx)\,p(\bw\g\D)\intd\bw\\ &= \E_{p(\bw\g\D)}[\sigma(\bw^\top\bx)]\\ &\approx \frac{1}{S}\sum_{s=1}^S \sigma({\bw\sth}^\top\bx), \quad \bw\sth\sim p(\bw\g\D). \end{align}\] Our prediction is the average of the predictions made by \(S\) different plausible model fits, sampled from the posterior distribution over parameters.
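As a minimal sketch of the empirical average above, assuming we somehow already have \(S\) posterior samples stacked as the rows of an array: the function name `mc_predict` and the stand-in samples below are hypothetical, and the random draws are only placeholders, not real posterior samples.

```python
import numpy as np

def sigmoid(a):
    """Logistic sigmoid, sigma(a) = 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + np.exp(-a))

def mc_predict(W, x):
    """Average sigma(w^T x) over the S parameter samples in the rows of W.

    W: (S, D) array, each row assumed to be a sample from p(w | D).
    x: (D,) test input.
    """
    return np.mean(sigmoid(W @ x))

# Placeholder samples only: in practice the rows of W would come from a
# sampler (for example MCMC) targeting the posterior.
rng = np.random.default_rng(0)
W = rng.normal(size=(1000, 3))
x = np.array([1.0, -0.5, 2.0])
p_y1 = mc_predict(W, x)  # Monte Carlo estimate of P(y=1 | x, D)
```

The prediction is just the mean of \(S\) individual model predictions, so its cost is one matrix-vector product once the samples are available.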

However, it is not at all obvious how to draw samples from the posterior over weights for general models. For simple versions of linear regression, we know that \(p(\bw\g\D)\) is Gaussian, but we don’t need to approximate the integral in that case. For logistic regression there’s no obvious way to draw samples from the posterior distribution (if we don’t approximate it with a Gaussian).

A family of methods, widely used in Statistics, known as Markov chain Monte Carlo (MCMC) methods, can be used to draw samples from the posterior distribution for models like logistic regression and neural networks. We don’t cover the details of MCMC in this course. If you’re interested, Iain has a tutorial here: https://homepages.inf.ed.ac.uk/imurray2/teaching/15nips/ — or a longer tutorial on probabilistic modelling that puts it in slightly more context: https://homepages.inf.ed.ac.uk/imurray2/teaching/14mlss/

1.1 Importance sampling

Importance sampling is a simple trick you should understand, because it comes up in various contexts in machine learning beyond Bayesian prediction [1]. Here we rewrite the integral as an expectation under an arbitrary tractable distribution of our choice, \(q(\bw)\): \[\begin{align} P(y\te1\g \bx, \D) &= \int \sigma(\bw^\top\bx)\,p(\bw\g\D)\,\frac{q(\bw)}{q(\bw)}\intd\bw\\ &= \E_{q(\bw)}\left[\sigma(\bw^\top\bx)\,\frac{p(\bw\g\D)}{q(\bw)}\right]\\ &\approx \frac{1}{S} \sum_{s=1}^S \sigma({\bw\sth}^\top\bx)\,\frac{p(\bw\sth\g\D)}{q(\bw\sth)}, \quad \bw\sth\sim q(\bw). \end{align}\] Here \(r\sth = \frac{p(\bw\sth\g\D)}{q(\bw\sth)}\) is the importance weight, which upweights the predictions for parameters that are more probable under the posterior than under the distribution we sampled from.

We shouldn’t divide by zero, so we need \(q(\bw)>0\) when \(p(\bw\g\D)>0\). Moreover, we don’t want \(q(\bw)\ll p(\bw\g\D)\) for any region of the weights, or occasionally we would see an enormous importance weight, and the estimator will have high variance.
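To see the effect of \(q\) being too narrow, here is a small 1-dimensional sketch. It assumes a standard normal as a stand-in for the posterior (so the weights can be computed exactly), and compares a proposal that is narrower than the target with one that is wider. The helper names are hypothetical.

```python
import numpy as np

def gauss_logpdf(w, mu, sigma):
    """Log density of N(mu, sigma^2) evaluated at w."""
    return -0.5 * ((w - mu) / sigma) ** 2 - np.log(sigma) - 0.5 * np.log(2 * np.pi)

def importance_weights(sigma_q, S, rng):
    """Weights p(w)/q(w) for target p = N(0, 1) and proposal q = N(0, sigma_q^2)."""
    w = rng.normal(0.0, sigma_q, size=S)  # w^(s) ~ q(w)
    log_r = gauss_logpdf(w, 0.0, 1.0) - gauss_logpdf(w, 0.0, sigma_q)
    return np.exp(log_r)

rng = np.random.default_rng(0)
r_narrow = importance_weights(0.5, 10_000, rng)  # q << p in the tails
r_wide = importance_weights(2.0, 10_000, rng)    # q covers the tails of p
print(r_narrow.max(), r_wide.max())
```

With the wide proposal the weights are \(2\exp(-0.375\,w^2)\), so they can never exceed 2. With the narrow proposal the weights are \(0.5\exp(1.5\,w^2)\), which grow without bound in the tails, so a few samples can dominate the average.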

The final detail is that we can’t usually evaluate the posterior \[ p(\bw\g\D) = \frac{P(\D\g\bw)\,p(\bw)}{P(\D)}, \] because we can’t usually evaluate the denominator \(P(\D)\). However, we can approximate that using importance sampling! \[\begin{align} P(\D) &= \int P(\D\g\bw)\,p(\bw)\intd\bw\\ &= \int P(\D\g\bw)\,p(\bw)\,\frac{q(\bw)}{q(\bw)}\intd\bw\\ &= \E_{q(\bw)}\left[\frac{P(\D\g\bw)\,p(\bw)}{q(\bw)}\right]\\ &\approx \frac{1}{S} \sum_{s=1}^S \frac{P(\D\g\bw\sth)\,p(\bw\sth)}{q(\bw\sth)} = \frac{1}{S} \sum_{s=1}^S \tilde{r}\sth, \end{align}\] where we’ve introduced “unnormalized importance weights”, defined as: \[ \tilde{r}\sth = \frac{P(\D\g\bw\sth)\,p(\bw\sth)}{q(\bw\sth)}. \] Substituting this approximation into the Bayesian prediction, we obtain: \[ P(y\te1\g \bx, \D) \approx \frac{1}{S} \sum_{s=1}^S \sigma({\bw\sth}^\top\bx)\,\frac{\tilde{r}\sth}{\frac{1}{S}\sum_{s'=1}^S \tilde{r}^{(s')}}, \quad \bw\sth\sim q(\bw) \] or \[ P(y\te1\g \bx, \D) \approx \sum_{s=1}^S \sigma({\bw\sth}^\top\bx)\,r\sth, \quad \bw\sth\sim q(\bw). \] In this final form, the average is under the distribution defined by the ‘normalized importance weights’: \[ r\sth = \frac{\tilde{r}\sth}{\sum_{s'=1}^S \tilde{r}^{(s')}}. \] From here on \(r\sth\) denotes these normalized weights, which absorb the factor of \(1/S\) and sum to one.
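The derivation above can be sketched in code as follows. This is only an illustration under stated assumptions: a spherical Gaussian prior, a broader spherical Gaussian proposal \(q\), labels in \(\{0,1\}\), and a tiny made-up dataset. The function name `snis_predict` and its parameters are hypothetical. Weights are handled in the log domain to avoid numerical underflow.

```python
import numpy as np

def log_sigmoid(a):
    """Numerically stable log sigma(a)."""
    return -np.logaddexp(0.0, -a)

def gauss_logpdf(W, std):
    """Log density of a zero-mean spherical Gaussian, one value per row of W."""
    return np.sum(-0.5 * (W / std) ** 2 - np.log(std) - 0.5 * np.log(2 * np.pi), axis=1)

def snis_predict(X, y, x_test, S=5000, prior_std=1.0, q_std=2.0, seed=0):
    """Importance-sampling estimates of P(y=1 | x_test, D) and log P(D)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, q_std, size=(S, X.shape[1]))  # w^(s) ~ q(w)
    A = W @ X.T                                        # (S, N) activations
    log_lik = np.sum(y * log_sigmoid(A) + (1 - y) * log_sigmoid(-A), axis=1)
    # log of unnormalized weights: log P(D|w) + log p(w) - log q(w)
    log_r_tilde = log_lik + gauss_logpdf(W, prior_std) - gauss_logpdf(W, q_std)
    m = log_r_tilde.max()
    scaled = np.exp(log_r_tilde - m)                   # r_tilde / exp(m)
    r = scaled / scaled.sum()                          # normalized weights
    log_marginal = m + np.log(scaled.mean())           # log of (1/S) sum r_tilde
    pred = np.sum(r * np.exp(log_sigmoid(W @ x_test)))
    return pred, log_marginal, r

# Made-up toy data: first column is a bias feature.
X = np.array([[1.0, 0.5], [1.0, -1.2], [1.0, 2.0], [1.0, -0.3]])
y = np.array([1.0, 0.0, 1.0, 0.0])
pred, log_marginal, r = snis_predict(X, y, x_test=np.array([1.0, 1.0]))
```

Subtracting the maximum log weight before exponentiating leaves the normalized weights unchanged, because the common factor cancels in the ratio.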

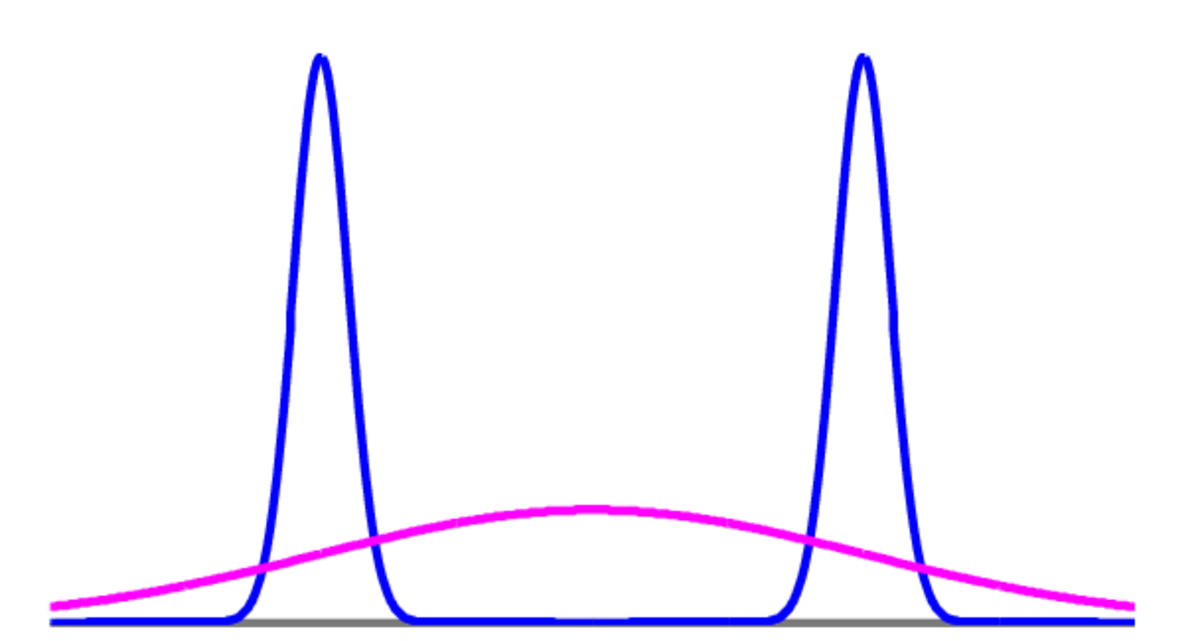

Consider a 1-dimensional bimodal posterior \(p(w\g\D)\) and \(q(w)\) a Gaussian centred at the trough of \(p(w\g\D)\), as shown in the figure above.

1.2 Importance sampling with the prior

A special case might help to understand importance sampling. If we sample model parameters from the prior, \(q(\bw) = p(\bw)\), the unnormalized weights are equal to the likelihood, \(\tilde{r}\sth = P(\D\g\bw\sth)\).

We would sample some number, \(S\), of settings of the parameters from the prior. Then we form a discrete distribution over these parameters with importance weights proportional to the likelihood. Functions that match the data will be given large importance weight.

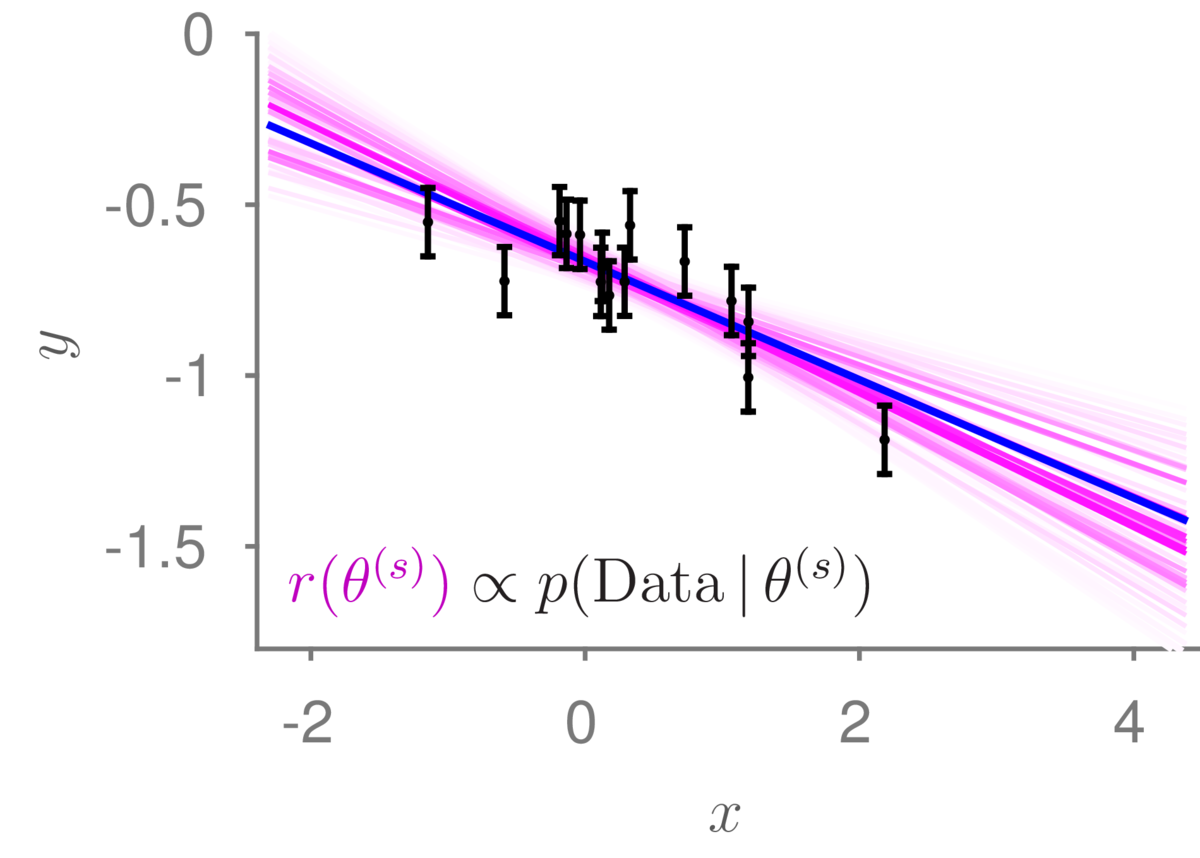

The figure above shows a linear regression example, where the true (unknown) line is shown in blue, and the purple lines show the discrete distribution over possible models we will use for prediction. The intensity of each line indicates its importance weight. I drew 10,000 samples from the prior, but most of the functions didn’t go near the data and were given such small weight that they are nearly white.

This importance sampling procedure works in principle for any model where we can sample possible models from the prior and evaluate the likelihood, including logistic regression. However, if we have many parameters, it is unlikely that any of our \(S\) samples from the prior will match the data well, and our estimates will be poor.
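A rough sketch of this prior-as-proposal scheme for logistic regression is given below, assuming a spherical Gaussian prior and synthetic data generated from a random weight vector. The helper names and all settings are hypothetical. It prints the largest normalized weight for a few input dimensions; a value close to 1 means a single sample dominates the discrete distribution.

```python
import numpy as np

def prior_is_weights(X, y, S=10_000, prior_std=1.0, seed=0):
    """Sample weights from the prior; return them with normalized importance weights."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, prior_std, size=(S, X.shape[1]))  # w^(s) ~ p(w)
    A = W @ X.T                                            # (S, N) activations
    # With q = prior, log r_tilde is the log-likelihood:
    # log sigma(z * w^T x), where z = 2y - 1 is the label in {-1, +1}.
    log_lik = -np.sum(np.logaddexp(0.0, -(2 * y - 1) * A), axis=1)
    r = np.exp(log_lik - log_lik.max())
    return W, r / r.sum()

def synthetic_data(N, D, seed=1):
    """Made-up logistic regression data with a random 'true' weight vector."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(N, D))
    w_true = rng.normal(size=D)
    y = (rng.random(N) < 1.0 / (1.0 + np.exp(-X @ w_true))).astype(float)
    return X, y

for D in (2, 20, 200):
    X, y = synthetic_data(N=100, D=D)
    W, r = prior_is_weights(X, y)
    print(D, r.max())  # largest normalized weight among the S samples
```

A prediction for a test input `x` would then be `np.sum(r / (1 + np.exp(-W @ x)))`, the weighted average of the sampled models' predictions.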

We could try to make the sampling distribution \(q(\bw)\) approximate the posterior, but for models with many parameters it is difficult to approximate the posterior well enough for importance sampling to work well. Advanced sampling methods like MCMC (mentioned above) and more advanced importance sampling methods (e.g., Sequential Monte Carlo, SMC) have been applied to neural networks, but are beyond the scope of this course.

[1] For example, reweighting data in a loss function to reflect how they were gathered, or weighting the importance of different trial runs in reinforcement learning, depending on the policy from which they were sampled.